Building a file storage with Next.js, PostgreSQL, and Minio S3

This is the second part of a series of articles about building a file storage with Next.js, PostgreSQL, and Minio S3. In the first part, we set up the development environment using Docker Compose. In this part, we will build a full-stack application using Next.js, PostgreSQL, and Minio S3.

Introduction

In the early days of web development, files like images and documents were stored on the web server along with the application code. However, with increasing user traffic and the need to store large files, cloud storage services like Amazon S3 have become the preferred way to store files.

Separating the storage of files from the web server provides several benefits, including:

- Scalability and performance

- Large file support (up to 5TB)

- Cost efficiency

- Separation of concerns

In this article, we will build an example of a file storage application using Next.js, PostgreSQL, and Minio S3. There are two main ways to upload files to S3 from Next.js:

- Using API routes to upload and download files. This approach is simpler, but it is limited to 4MB: if you try to upload a file larger than 4MB, you will get the Next.js error "API Routes Response Size Limited to 4MB".

- Using presigned URLs to get temporary access to S3 and then uploading files directly from the frontend to S3. This approach is a bit more complex, but file uploads do not consume resources on the Next.js server.

Source Code

You can find the full source code for this tutorial on GitHub.

Shared code for both approaches

Some code is shared between the two approaches, such as UI components, database models, utility functions, and types.
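The shared types live in `~/utils/types`, which the article does not list. Judging from how the code in this article uses them, they look roughly like the sketch below. The field names come from that code, but the exact definitions and the `toShortFileProps` helper are assumptions for illustration.

```typescript
// Shapes inferred from how the article's code uses them; the real
// definitions in ~/utils/types may differ.

// Minimal file info sent to the server before an upload
export type ShortFileProp = {
  originalFileName: string
  fileSize: number // bytes
}

// One presigned upload URL plus the file it belongs to
export type PresignedUrlProp = ShortFileProp & {
  fileNameInBucket: string
  url: string
}

// A file as shown in the list on the main page
export type FileProps = ShortFileProp & {
  id: string
  isDeleting?: boolean
}

// Hypothetical helper: keep only the name and size of browser File objects
export function toShortFileProps(files: Array<{ name: string; size: number }>): ShortFileProp[] {
  return files.map((file) => ({ originalFileName: file.name, fileSize: file.size }))
}
```

Only the name and size go to the server. The file contents travel either in the form data (API route approach) or directly to S3 (presigned URL approach).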

Database schema

To save information about the uploaded files, we will create a File model in the database. In the schema.prisma file, add the following model:

```prisma
model File {
  id           String   @id @default(uuid())
  bucket       String
  fileName     String   @unique
  originalName String
  createdAt    DateTime @default(now())
  size         Int
}
```

- id - the unique identifier of the file in the database, generated by the uuid() function.

- bucket - the name of the S3 bucket where the file is stored; in our case, it is the same for all files.

- fileName - the name of the file (the object key) in S3. It must be unique for each file, because if two uploads used the same name, the new file would overwrite the old one in the bucket.

- originalName - the original name of the file that the user uploaded. We use it to display the file name to the user and when downloading the file.

- createdAt - the date and time when the file was uploaded.

- size - the size of the file in bytes.

After creating the model, we need to apply the changes to the database. We can do this with either the `prisma db push` or the `prisma migrate dev` command. `db push` synchronizes the schema with the database directly, without creating migration files, which is handy for prototyping. `migrate dev` generates SQL migration files that keep a history of schema changes and then applies them. More information about the commands can be found in the Prisma docs. In our case, it doesn't matter which command we use, so we will use `db push`.

Environment variables

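If we use Docker Compose to run the application for testing and development, we can store environment variables in the compose file, because there is no need to keep them secret. In production, however, we should store them in a .env file.

Here is an example of the .env file for AWS S3 and PostgreSQL. Replace the values with your own. The Minio client created in s3-file-management.ts also reads two optional variables, S3_PORT and S3_USE_SSL. Set S3_USE_SSL="true" when the endpoint is served over HTTPS, as with AWS S3.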

```bash
DATABASE_URL="postgresql://postgres:postgres@REMOTESERVERHOST:5432/myapp-db?schema=public"
S3_ENDPOINT="s3.amazonaws.com"
S3_ACCESS_KEY="AKIAIOSFODNN7EXAMPLE"
S3_SECRET_KEY="wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"
S3_BUCKET_NAME="my-bucket"
```

To use Google Cloud Storage, the .env file will look like this:

```bash
S3_ENDPOINT="storage.googleapis.com"
...
```
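The project validates these variables with the @t3-oss/env-nextjs library from the T3 stack. Without that stack, a plain TypeScript function can do a similar job. The sketch below is hypothetical (`readS3Config` is not part of the article's code). It mirrors how the Minio client reads `S3_PORT` and `S3_USE_SSL` and fails fast when a required variable is missing.

```typescript
// Hypothetical alternative to @t3-oss/env-nextjs for the S3 settings
export type S3Config = {
  endPoint: string
  port?: number
  accessKey: string
  secretKey: string
  useSSL: boolean
  bucketName: string
}

export function readS3Config(env: Record<string, string | undefined>): S3Config {
  // Throw early with a readable message instead of failing on the first S3 call
  const required = (key: string): string => {
    const value = env[key]
    if (!value) throw new Error(`Missing environment variable ${key}`)
    return value
  }

  // S3_PORT is optional; when it is undefined, the Minio client uses its default port
  const port = env.S3_PORT ? Number(env.S3_PORT) : undefined
  if (port !== undefined && (!Number.isInteger(port) || port <= 0)) {
    throw new Error('S3_PORT must be a positive integer')
  }

  return {
    endPoint: required('S3_ENDPOINT'),
    port,
    accessKey: required('S3_ACCESS_KEY'),
    secretKey: required('S3_SECRET_KEY'),
    // Same rule as the article's client: only the exact string "true" enables SSL
    useSSL: env.S3_USE_SSL === 'true',
    bucketName: required('S3_BUCKET_NAME'),
  }
}
```

In an app, you would call `readS3Config(process.env)` once at startup and pass the result to `new Minio.Client(...)`.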

Utility functions and types

Since we are using Minio as the storage service, we need to install the Minio JavaScript client library to interact with S3. This library works with any S3-compatible storage service, including Amazon S3, Google Cloud Storage, and others.

Install the Minio library using the following command:

```bash
npm install minio
```

Then, let's create utility functions to work with Minio S3. I prefer to keep utility functions in a separate file, so I will create a file s3-file-management.ts in the utils folder. Here we use the @t3-oss/env-nextjs library, which is included in the T3 stack, to validate environment variables.

```typescript
import * as Minio from 'minio'
import type internal from 'stream'
import { env } from '~/env.js'

// Create a new Minio client with the S3 endpoint, access key, and secret key
export const s3Client = new Minio.Client({
  endPoint: env.S3_ENDPOINT,
  port: env.S3_PORT ? Number(env.S3_PORT) : undefined,
  accessKey: env.S3_ACCESS_KEY,
  secretKey: env.S3_SECRET_KEY,
  useSSL: env.S3_USE_SSL === 'true',
})

// Create the bucket on first use so uploads don't fail on a fresh server
export async function createBucketIfNotExists(bucketName: string) {
  const bucketExists = await s3Client.bucketExists(bucketName)
  if (!bucketExists) {
    await s3Client.makeBucket(bucketName)
  }
}
```

Main page with file list

The main page will contain the upload form and the list of uploaded files.

To get the list of files from the database, we will create a function fetchFiles that sends a GET request to an API route, which reads the files from the database.

And here is the full code of the main page:

```tsx
import Head from 'next/head'
import { UploadFilesS3PresignedUrl } from '~/components/UploadFilesForm/UploadFilesS3PresignedUrl'
import { FilesContainer } from '~/components/FilesContainer'
import { useState, useEffect } from 'react'
import { type FileProps } from '~/utils/types'
import { UploadFilesRoute } from '~/components/UploadFilesForm/UploadFilesRoute'

export type fileUploadMode = 's3PresignedUrl' | 'NextjsAPIEndpoint'

export default function Home() {
  const [files, setFiles] = useState<FileProps[]>([])
  const [uploadMode, setUploadMode] = useState<fileUploadMode>('s3PresignedUrl')

  // Fetch files from the database
  const fetchFiles = async () => {
    const response = await fetch('/api/files')
    const body = (await response.json()) as FileProps[]
    // set isDeleting to false for all files after fetching
    setFiles(body.map((file) => ({ ...file, isDeleting: false })))
  }

  // fetch files on the first render
  useEffect(() => {
    fetchFiles().catch(console.error)
  }, [])

  // determine if we should download using presigned url or Nextjs API endpoint
  const downloadUsingPresignedUrl = uploadMode === 's3PresignedUrl'

  // handle mode change between s3PresignedUrl and NextjsAPIEndpoint
  const handleModeChange = (event: React.ChangeEvent<HTMLSelectElement>) => {
    setUploadMode(event.target.value as fileUploadMode)
  }

  return (
    <>
      <Head>
        <title>File Uploads with Next.js, Prisma, and PostgreSQL</title>
        <meta name='description' content='File Uploads with Next.js, Prisma, and PostgreSQL ' />
        <link rel='icon' href='/favicon.ico' />
      </Head>
      <main className='flex min-h-screen items-center justify-center gap-5 font-mono'>
        <div className='container flex flex-col gap-5 px-3'>
          <ModeSwitchMenu uploadMode={uploadMode} handleModeChange={handleModeChange} />
          {uploadMode === 's3PresignedUrl' ? (
            <UploadFilesS3PresignedUrl onUploadSuccess={fetchFiles} />
          ) : (
            <UploadFilesRoute onUploadSuccess={fetchFiles} />
          )}
          <FilesContainer
            files={files}
            fetchFiles={fetchFiles}
            setFiles={setFiles}
            downloadUsingPresignedUrl={downloadUsingPresignedUrl}
          />
        </div>
      </main>
    </>
  )
}
```

API route to get list of files from the database

To query the database, we need an API route. Create a file index.ts in the pages/api/files folder. This route returns the list of files from the database. For simplicity, we will not use pagination; we just get the 10 latest files.

If you need pagination, you can implement it with Prisma's skip and take options. More information about pagination can be found in the Prisma docs.
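As a rough illustration of skip and take, a page number can be turned into Prisma arguments like this. `paginationArgs` is a hypothetical helper. The handler that follows keeps the simpler fixed limit.

```typescript
// Hypothetical helper: convert a 1-based page number into Prisma's skip/take arguments
export function paginationArgs(page: number, pageSize = 10): { skip: number; take: number } {
  if (!Number.isInteger(page) || page < 1) {
    throw new Error('page must be a positive integer')
  }
  if (!Number.isInteger(pageSize) || pageSize < 1) {
    throw new Error('pageSize must be a positive integer')
  }
  // Page 1 skips nothing, page 2 skips one full page, and so on
  return { skip: (page - 1) * pageSize, take: pageSize }
}

// Usage inside the handler (sketch):
// const files = await db.file.findMany({ ...paginationArgs(2), orderBy: { createdAt: 'desc' } })
```

Offset pagination with skip is simple, but the database still has to walk past the skipped rows. For very large tables, the Prisma docs also describe cursor-based pagination.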

```typescript
import type { NextApiRequest, NextApiResponse } from 'next'
import type { FileProps } from '~/utils/types'
import { db } from '~/server/db'

const LIMIT_FILES = 10

const handler = async (req: NextApiRequest, res: NextApiResponse) => {
  // Get the 10 latest files from the database
  const files = await db.file.findMany({
    take: LIMIT_FILES,
    orderBy: {
      createdAt: 'desc',
    },
    select: {
      id: true,
      originalName: true,
      size: true,
    },
  })

  // The database type is a bit different from the frontend type
  // Make the array of files compatible with the frontend type FileProps
  const filesWithProps: FileProps[] = files.map((file) => ({
    id: file.id,
    originalFileName: file.originalName,
    fileSize: file.size,
  }))

  return res.status(200).json(filesWithProps)
}

export default handler
```

Mode switch menu

The mode switch menu will allow the user to switch between the two approaches for uploading files.

```tsx
export type ModeSwitchMenuProps = {
  uploadMode: fileUploadMode
  handleModeChange: (event: React.ChangeEvent<HTMLSelectElement>) => void
}

function ModeSwitchMenu({ uploadMode, handleModeChange }: ModeSwitchMenuProps) {
  return (
    <ul className='flex items-center justify-center gap-2'>
      <li>
        <label htmlFor='uploadMode'>Upload Mode:</label>
      </li>
      <li>
        <select
          className='rounded-md border-2 border-gray-300'
          id='uploadMode'
          value={uploadMode}
          onChange={handleModeChange}
        >
          <option value='s3PresignedUrl'>S3 Presigned Url</option>
          <option value='NextjsAPIEndpoint'>Next.js API Endpoint</option>
        </select>
      </li>
    </ul>
  )
}
```

File item UI

To display the files, we will create a component FileItem.tsx that will display the file name, size, and a delete button. Here is a simplified version of the file without functions to download and delete files. These functions will be added later.

```tsx
import { type FileProps } from '~/utils/types'
import { LoadSpinner } from './LoadSpinner'
import { formatBytes } from '~/utils/fileUploadHelpers'

type FileItemProps = {
  file: FileProps
  fetchFiles: () => Promise<void>
  setFiles: (files: FileProps[] | ((files: FileProps[]) => FileProps[])) => void
  downloadUsingPresignedUrl: boolean
}

export function FileItem({ file, fetchFiles, setFiles, downloadUsingPresignedUrl }: FileItemProps) {
  return (
    <li className='relative flex items-center justify-between gap-2 border-b py-2 text-sm'>
      <button
        className='truncate text-blue-500 hover:text-blue-600 hover:underline '
        onClick={() => downloadFile(file)}
      >
        {file.originalFileName}
      </button>

      <div className=' flex items-center gap-2'>
        <span className='w-32 '>{formatBytes(file.fileSize)}</span>
        <button
          className='flex w-full flex-1 cursor-pointer items-center justify-center
            rounded-md bg-red-500 px-4 py-2 text-white hover:bg-red-600
            disabled:cursor-not-allowed disabled:opacity-50'
          onClick={() => deleteFile(file.id)}
          disabled={file.isDeleting}
        >
          Delete
        </button>
      </div>

      {file.isDeleting && (
        <div className='absolute inset-0 flex items-center justify-center rounded-md bg-gray-900 bg-opacity-20'>
          <LoadSpinner size='small' />
        </div>
      )}
    </li>
  )
}
```

File container UI

To display the list, we will create a component FilesContainer.tsx that renders the files using the FileItem component.

```tsx
import { type FilesListProps } from '~/utils/types'
import { FileItem } from './FileItem'

export function FilesContainer({ files, fetchFiles, setFiles, downloadUsingPresignedUrl }: FilesListProps) {
  if (files.length === 0) {
    return (
      <div className='flex h-96 flex-col items-center justify-center '>
        <p className='text-xl'>No files uploaded yet</p>
      </div>
    )
  }

  return (
    <div className='h-96'>
      <h1 className='text-xl '>
        Last {files.length} uploaded file{files.length > 1 ? 's' : ''}
      </h1>
      <ul className='h-80 overflow-auto'>
        {files.map((file) => (
          <FileItem
            key={file.id}
            file={file}
            fetchFiles={fetchFiles}
            setFiles={setFiles}
            downloadUsingPresignedUrl={downloadUsingPresignedUrl}
          />
        ))}
      </ul>
    </div>
  )
}
```

Upload form UI

To upload files, we will create a form with a file input field. UploadFilesFormUI.tsx will contain the UI for the upload form which will be used in both approaches. Here is a simplified version of the file:

```tsx
import { LoadSpinner } from '../LoadSpinner'
import { type UploadFilesFormUIProps } from '~/utils/types'

export function UploadFilesFormUI({ isLoading, fileInputRef, uploadToServer, maxFileSize }: UploadFilesFormUIProps) {
  return (
    <form className='flex flex-col items-center justify-center gap-3' onSubmit={uploadToServer}>
      <h1 className='text-2xl'>File upload example using Next.js, MinIO S3, Prisma and PostgreSQL</h1>
      {isLoading ? (
        <LoadSpinner />
      ) : (
        <div className='flex h-16 gap-5'>
          <input
            id='file'
            type='file'
            multiple
            className='rounded-md border bg-gray-100 p-2 py-5'
            required
            ref={fileInputRef}
          />
          <button
            disabled={isLoading}
            className='m-2 rounded-md bg-blue-500 px-5 py-2 text-white
              hover:bg-blue-600 disabled:cursor-not-allowed disabled:bg-gray-400'
          >
            Upload
          </button>
        </div>
      )}
    </form>
  )
}
```

1.1 Upload files using Next.js API routes (4MB limit)

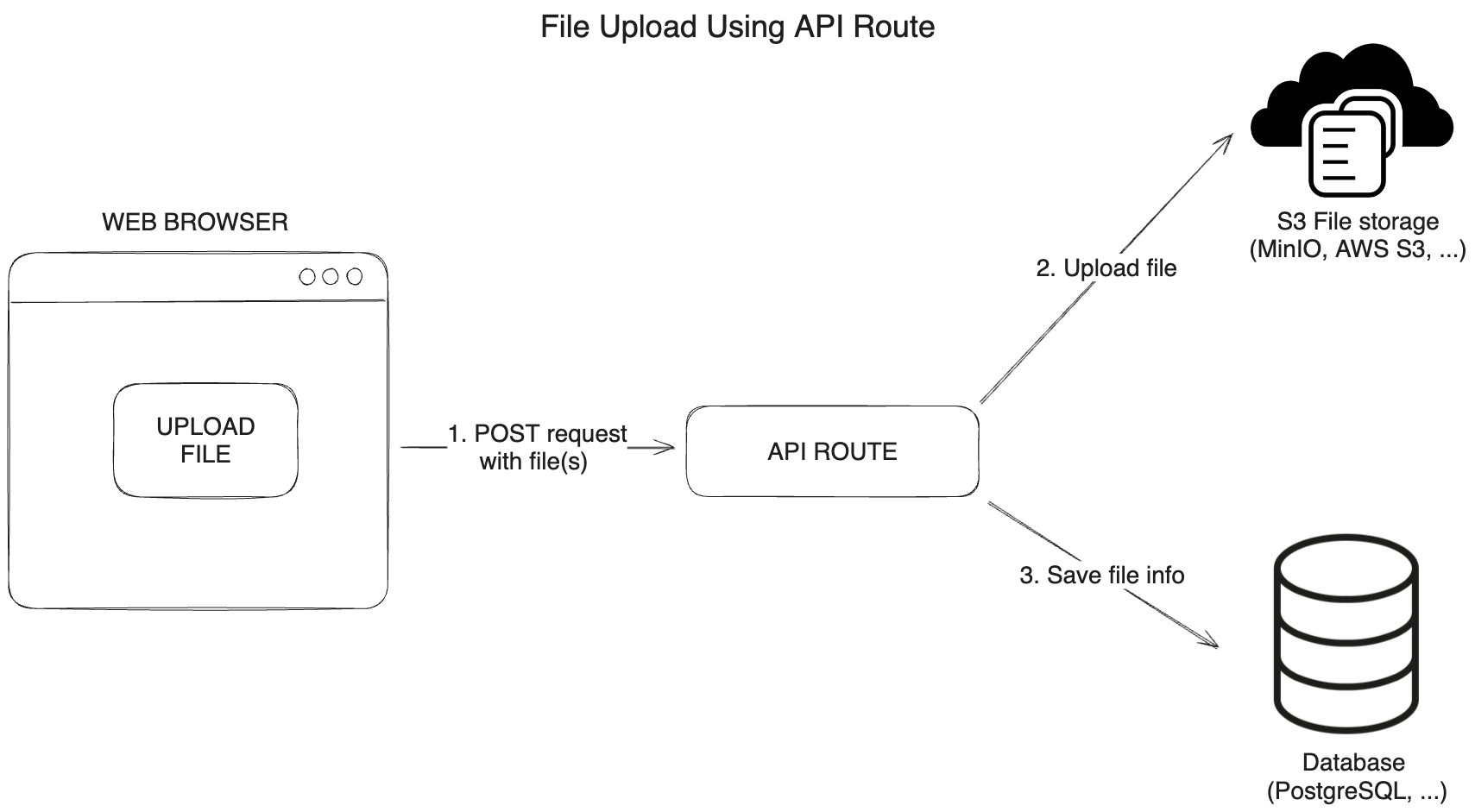

The diagram above shows the steps involved in uploading and downloading files using Next.js API routes.

To upload files:

- User sends a POST request to the API route with the file to upload.

- The API route uploads the file to S3.

- The API route saves the file name in the database and returns the upload status to the client.

Frontend - Upload form logic for API routes

First, we will create an UploadFilesRoute.tsx file with the logic for the upload form.

The algorithm for uploading files to the server is as follows:

- The user selects files to upload, and fileInputRef is updated with the selected files.

- Form data is created from the selected files using the createFormData function and the FormData API.

- The form data is sent to the server in a POST request to the /api/files/upload/smallFiles route.

- The server uploads the files to S3 and returns a status and message in the response.

It's usually a good idea to extract a UI component's logic into a separate file. One way is to create hooks for the logic and use them in the UI component. For simplicity, however, we will put the logic in a separate file, fileUploadHelpers.ts, and use it in the UploadFilesRoute component.

```typescript
/**
 * Create form data from files
 * @param files files to upload
 * @returns form data
 */
export function createFormData(files: File[]): FormData {
  const formData = new FormData()
  files.forEach((file) => {
    formData.append('file', file)
  })
  return formData
}
```

Here is a simplified version, without validation, loading state and error handling:

```tsx
import { useRef } from 'react'
import { createFormData, MAX_FILE_SIZE_NEXTJS_ROUTE } from '~/utils/fileUploadHelpers'
import { UploadFilesFormUI } from './UploadFilesFormUI'

type UploadFilesFormProps = {
  onUploadSuccess: () => void
}

export function UploadFilesRoute({ onUploadSuccess }: UploadFilesFormProps) {
  const fileInputRef = useRef<HTMLInputElement | null>(null)

  const uploadToServer = async (event: React.FormEvent<HTMLFormElement>) => {
    event.preventDefault()
    // convert the FileList to File[]; nothing to do if no files were selected
    const fileList = fileInputRef.current?.files
    if (!fileList?.length) return
    const files = Array.from(fileList)

    const formData = createFormData(files)
    const response = await fetch('/api/files/upload/smallFiles', {
      method: 'POST',
      body: formData,
    })
    const body = (await response.json()) as {
      status: 'ok' | 'fail'
      message: string
    }

    // refresh the file list after a successful upload
    if (body.status === 'ok') {
      onUploadSuccess()
    }
  }

  // Loading state is omitted in this simplified version
  return (
    <UploadFilesFormUI
      isLoading={false}
      fileInputRef={fileInputRef}
      uploadToServer={uploadToServer}
      maxFileSize={MAX_FILE_SIZE_NEXTJS_ROUTE}
    />
  )
}
```

Check the full code in the GitHub repository.
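Both upload components import helpers from fileUploadHelpers.ts whose code appears only in the repository: `validateFiles`, the `MAX_FILE_SIZE_*` constants, and `formatBytes` (used by FileItem). The sketch below is a hypothetical version of such helpers. It assumes sizes are expressed in megabytes (1 MB = 1024 × 1024 bytes) and that `validateFiles` returns an error message or `undefined`. The repository's real signatures may differ.

```typescript
// Assumed value: the 4 MB limit of the API route approach
export const MAX_FILE_SIZE_NEXTJS_ROUTE = 4 // MB

type FileLike = { name: string; size: number } // a browser File satisfies this

// Returns an error message for the first invalid file, or undefined if all files pass
export function validateFiles(files: FileLike[], maxSizeMB: number): string | undefined {
  if (files.length === 0) return 'Please select at least one file'
  const maxBytes = maxSizeMB * 1024 * 1024
  const tooBig = files.find((file) => file.size > maxBytes)
  if (tooBig) return `${tooBig.name} is larger than ${maxSizeMB} MB`
  return undefined
}

// Human-readable size, e.g. 1536 -> "1.5 KB"
export function formatBytes(bytes: number, decimals = 2): string {
  const units = ['Bytes', 'KB', 'MB', 'GB', 'TB']
  let value = bytes
  let unit = 0
  // divide by 1024 until the value fits the current unit (or we run out of units)
  while (value >= 1024 && unit < units.length - 1) {
    value /= 1024
    unit++
  }
  // parseFloat drops the trailing zeros that toFixed produces ("1.50" -> 1.5)
  return `${parseFloat(value.toFixed(decimals))} ${units[unit]}`
}
```

Checking the size in the browser before sending saves a round trip that the server would reject anyway. The server-side limit still applies.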

Backend - Upload files using Next.js API routes

1. Create utility functions to upload files using Minio S3

To upload files to S3, we will create a utility function saveFileInBucket that uses the putObject method of the Minio client to upload the file to the S3 bucket. Before uploading, it calls createBucketIfNotExists, which creates the bucket if it doesn't exist.

```typescript
/**
 * Save file in S3 bucket
 * @param bucketName name of the bucket
 * @param fileName name of the file
 * @param file file to save
 */
export async function saveFileInBucket({
  bucketName,
  fileName,
  file,
}: {
  bucketName: string
  fileName: string
  file: Buffer | internal.Readable
}) {
  // Create bucket if it doesn't exist
  await createBucketIfNotExists(bucketName)

  // check if file exists - optional.
  // Without this check, the file will be overwritten if it exists
  const fileExists = await checkFileExistsInBucket({
    bucketName,
    fileName,
  })
  if (fileExists) {
    throw new Error('File already exists')
  }

  // Upload file to S3 bucket
  await s3Client.putObject(bucketName, fileName, file)
}

/**
 * Check if file exists in bucket
 * @param bucketName name of the bucket
 * @param fileName name of the file
 * @returns true if file exists, false if not
 */
export async function checkFileExistsInBucket({ bucketName, fileName }: { bucketName: string; fileName: string }) {
  try {
    // statObject throws if the object doesn't exist
    await s3Client.statObject(bucketName, fileName)
  } catch (error) {
    return false
  }
  return true
}
```

2. Create an API route to upload files

Next, we will create an API route to handle file uploads. Create a file smallFiles.ts in the pages/api/files/upload folder. This file will do both the file upload and save the file name in the database.

To parse the incoming request, we will use the formidable library, a Node.js module for parsing form data, especially file uploads.

The algorithm for uploading files to the server:

- Get files from the request using formidable.

Then, for each file:

- Read the file from its temporary file path using fs.createReadStream.

- Generate a unique file name using the nanoid library.

- Save the file to S3 using the saveFileInBucket function, which invokes the putObject method of the Minio client.

- Save the file info to the database using the Prisma file.create method.

Finally, return the status and message in the response to the client.
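The unique-name step prefixes the original name with a short random ID. `nanoid(5)` draws five characters from a 64-symbol alphabet, which gives 64^5 (about 1.07 billion) possible prefixes. Collisions are therefore unlikely but possible. Because `fileName` is `@unique` and `saveFileInBucket` refuses to overwrite an existing object, a collision fails loudly instead of replacing a file. The sketch below shows the same idea with Node's built-in crypto module instead of nanoid. `randomId` and `uniqueObjectName` are hypothetical names, and replacing slashes is an extra precaution not present in the article's code.

```typescript
import { randomBytes } from 'node:crypto'

// 64 URL-safe symbols, the same set nanoid uses by default
const ALPHABET = 'ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789_-'

export function randomId(length = 5): string {
  // 256 is a multiple of 64, so masking each byte with 63 keeps every symbol equally likely
  return Array.from(randomBytes(length), (byte) => ALPHABET.charAt(byte & 63)).join('')
}

export function uniqueObjectName(originalName: string, idLength = 5): string {
  // "/" is treated as a folder separator by S3 tools, so keep the key flat
  const safeName = originalName.replace(/[\\/]/g, '_')
  return `${randomId(idLength)}-${safeName}`
}
```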

The files are processed concurrently using Promise.all. For each file, the S3 upload and the database write run one after the other. You can also consider Promise.allSettled, which waits for every file and reports each failure separately instead of rejecting on the first error.
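Here is a minimal sketch of the Promise.allSettled variant. `processAllSettled` and `statusFor` are hypothetical helpers. They take the per-file work (upload plus database write) as a callback so the logic can be tested without S3. Instead of turning the first error into a single 500 response, they report which files failed.

```typescript
export type FileResult = { fileName: string; ok: boolean; error?: string }

// Run the per-file work for every file and collect an outcome for each one.
// processFile should be an async function, so errors surface as rejected promises.
export async function processAllSettled(
  fileNames: string[],
  processFile: (fileName: string) => Promise<void>,
): Promise<FileResult[]> {
  // allSettled never rejects: it waits for every promise, fulfilled or rejected
  const results = await Promise.allSettled(fileNames.map((fileName) => processFile(fileName)))

  // results has the same order and length as fileNames
  return results.map((result, i) => {
    const fileName = fileNames[i] as string
    if (result.status === 'fulfilled') return { fileName, ok: true }
    const reason: unknown = result.reason
    return { fileName, ok: false, error: reason instanceof Error ? reason.message : String(reason) }
  })
}

// One HTTP status for the whole request: 200 only if every file succeeded
export function statusFor(results: FileResult[]): number {
  return results.every((result) => result.ok) ? 200 : 500
}
```

In the route, `processFile` would wrap the existing `saveFileInBucket` and `db.file.create` calls, and the results array could be returned in the response body.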

If an error occurs during the file upload or saving the file info to the database, we will set the status to 500 and return an error message.

```typescript
import type { NextApiRequest, NextApiResponse } from 'next'
import fs from 'fs'
import { IncomingForm, type File } from 'formidable'
import { env } from '~/env'
import { saveFileInBucket } from '~/utils/s3-file-management'
import { nanoid } from 'nanoid'
import { db } from '~/server/db'

const bucketName = env.S3_BUCKET_NAME

type ProcessedFiles = Array<[string, File]>

const handler = async (req: NextApiRequest, res: NextApiResponse) => {
  let status = 200,
    resultBody = { status: 'ok', message: 'Files were uploaded successfully' }

  // Get files from request using formidable
  const files = await new Promise<ProcessedFiles | undefined>((resolve, reject) => {
    const form = new IncomingForm()
    const files: ProcessedFiles = []
    form.on('file', function (field, file) {
      files.push([field, file])
    })
    form.on('end', () => resolve(files))
    form.on('error', (err) => reject(err))
    form.parse(req, () => {
      // files are collected by the 'file' event handler above
    })
  }).catch(() => {
    ;({ status, resultBody } = setErrorStatus(status, resultBody))
    return undefined
  })

  if (files?.length) {
    // Upload files to S3 bucket
    try {
      // Files are processed concurrently; for each file, the S3 upload
      // and the database write run one after the other
      await Promise.all(
        files.map(async ([_, fileObject]) => {
          const file = fs.createReadStream(fileObject?.filepath)
          // generate unique file name
          const fileName = `${nanoid(5)}-${fileObject?.originalFilename}`
          // save file to S3 bucket
          await saveFileInBucket({
            bucketName,
            fileName,
            file,
          })
          // save file info to database
          await db.file.create({
            data: {
              bucket: bucketName,
              fileName,
              originalName: fileObject?.originalFilename ?? fileName,
              size: fileObject?.size ?? 0,
            },
          })
        })
      )
    } catch (e) {
      console.error(e)
      ;({ status, resultBody } = setErrorStatus(status, resultBody))
    }
  }

  res.status(status).json(resultBody)
}

// Set error status and result body if error occurs
export function setErrorStatus(status: number, resultBody: { status: string; message: string }) {
  status = 500
  resultBody = {
    status: 'fail',
    message: 'Upload error',
  }
  return { status, resultBody }
}

// Disable body parser built-in to Next.js to allow formidable to work
export const config = {
  api: {
    bodyParser: false,
  },
}

export default handler
```

Remember to include export const config. It prevents the built-in body parser of Next.js from parsing the request body, which allows formidable to work.

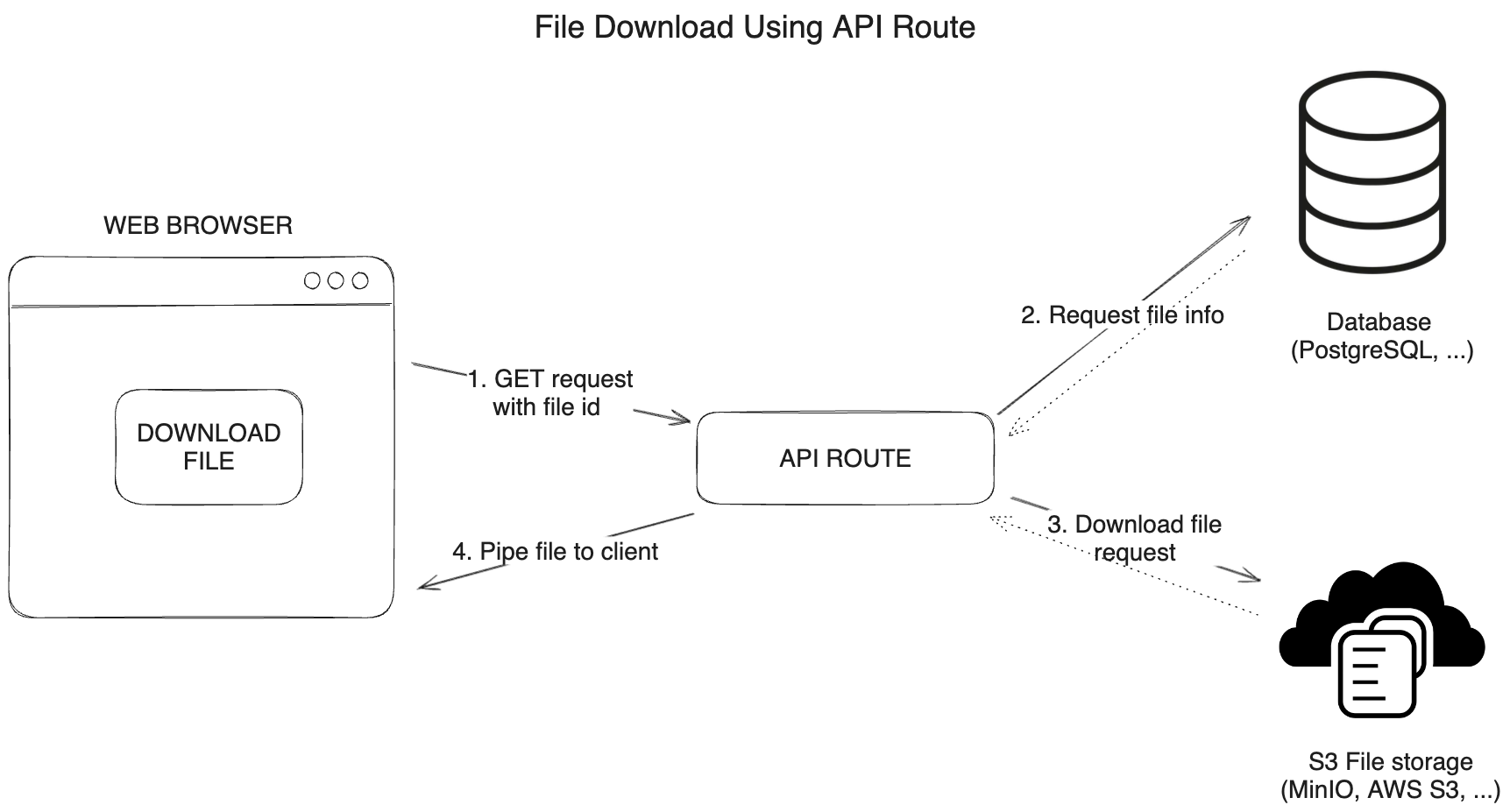

1.2 Download files using Next.js API routes (4MB limit)

To download files:

- User sends a GET request to the API route with the file id to download.

- The API route requests the file name from the database.

- The API route downloads the file from S3.

- The file is piped to the response object and returned to the client.
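The route sends the file's original name in a Content-Disposition header. Writing it as `filename="..."` breaks for names containing quotes or non-ASCII characters. A common remedy, described in RFC 6266 with the encoding from RFC 5987, is to send an ASCII fallback plus a percent-encoded `filename*` parameter. `contentDisposition` is a hypothetical helper that could replace the template string in the download route.

```typescript
// Hypothetical helper for the download route's Content-Disposition header
export function contentDisposition(fileName: string): string {
  // ASCII fallback for old clients: replace non-printable-ASCII characters, quotes and backslashes
  const fallback = fileName.replace(/[^\x20-\x7e]|["\\]/g, '_')
  // RFC 5987 value: UTF-8 percent-encoding. encodeURIComponent leaves ' ( ) * unencoded,
  // but they are not allowed there, so encode them as well
  const encoded = encodeURIComponent(fileName).replace(
    /['()*]/g,
    (char) => `%${char.charCodeAt(0).toString(16).toUpperCase()}`,
  )
  return `attachment; filename="${fallback}"; filename*=UTF-8''${encoded}`
}

// Usage in the route (sketch):
// res.setHeader('Content-Disposition', contentDisposition(fileObject.originalName))
```

Browsers that understand `filename*` prefer it and show the exact original name. Older clients fall back to the sanitized ASCII version.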

Frontend - Download files using Next.js API routes

To download files, we will create a function downloadFile inside the FileItem component. It opens the API route in a new tab; the route downloads the file from S3 and returns it to the user.

```tsx
const downloadFile = async (file: FileProps) => {
  window.open(`/api/files/download/smallFiles/${file.id}`, '_blank')
}
```

Backend - Download files using Next.js API routes

1. Create a utility function to download files from S3

To download files from S3, we will create a utility function getFileFromBucket that uses the getObject method of the Minio client to download the file from the S3 bucket. It first calls statObject to check that the file exists and returns null if it doesn't.

```typescript
/**
 * Get file from S3 bucket
 * @param bucketName name of the bucket
 * @param fileName name of the file
 * @returns file from S3
 */
export async function getFileFromBucket({ bucketName, fileName }: { bucketName: string; fileName: string }) {
  try {
    await s3Client.statObject(bucketName, fileName)
  } catch (error) {
    console.error(error)
    return null
  }
  return await s3Client.getObject(bucketName, fileName)
}
```

2. Create an API route to download files

To download files, we will create an API route to handle file downloads. Create a file [id].ts in the pages/api/files/download/smallFiles folder, so that it matches the /api/files/download/smallFiles/[id] URL used by the frontend. This route downloads the file from S3 and returns it to the user.

Here we use a Next.js dynamic route with [id] to get the file id from the request query. More information about dynamic routes can be found in the Next.js docs.

The algorithm for downloading files from the server is as follows:

- Get the file name and original name from the database using the Prisma file.findUnique method.

- Get the file from the S3 bucket using the getFileFromBucket function.

- Set the header for downloading the file.

- Pipe the file to the response object.

```typescript
import { type NextApiRequest, type NextApiResponse } from 'next'
import { getFileFromBucket } from '~/utils/s3-file-management'
import { env } from '~/env'
import { db } from '~/server/db'

async function handler(req: NextApiRequest, res: NextApiResponse) {
  const { id } = req.query
  if (typeof id !== 'string') return res.status(400).json({ message: 'Invalid request' })

  // get the file name and original name from the database
  const fileObject = await db.file.findUnique({
    where: {
      id,
    },
    select: {
      fileName: true,
      originalName: true,
    },
  })
  if (!fileObject) {
    return res.status(404).json({ message: 'Item not found' })
  }

  // get the file from the bucket and pipe it to the response object
  const data = await getFileFromBucket({
    bucketName: env.S3_BUCKET_NAME,
    fileName: fileObject?.fileName,
  })
  if (!data) {
    return res.status(404).json({ message: 'Item not found' })
  }

  // set header for download file
  res.setHeader('content-disposition', `attachment; filename="${fileObject?.originalName}"`)

  // pipe the data to the res object
  data.pipe(res)
}

export default handler
```

2.1 Upload files using presigned URLs

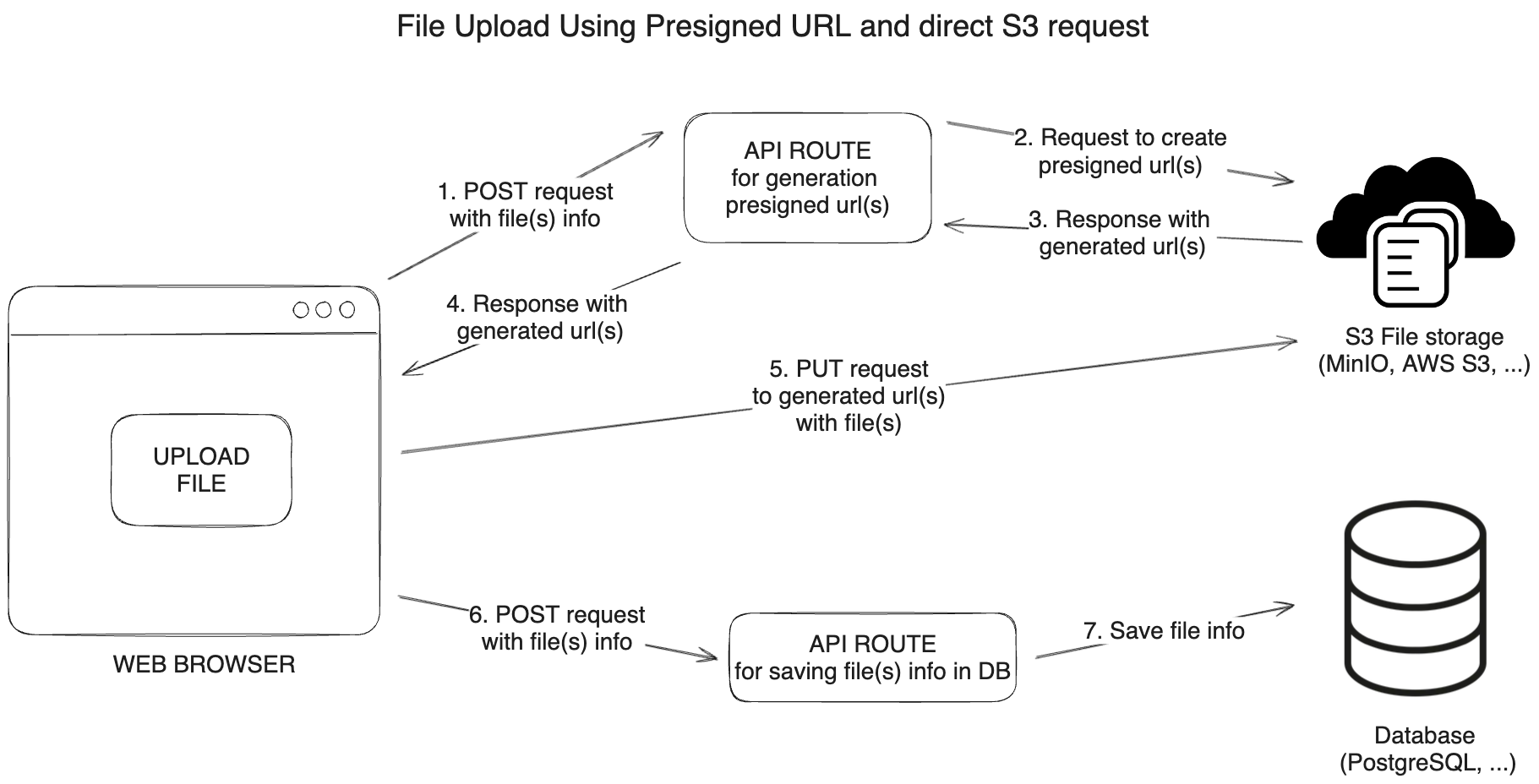

In the diagram above, we can see the steps involved in uploading and downloading files using presigned URLs. This approach is more complex, but the file contents never pass through the Next.js server. The presigned URL is generated on the server and sent to the client, which uses it to upload the file directly to S3.

To upload files:

- The user sends a POST request to the API route with the file info to upload.

- The API route sends requests to S3 to generate presigned URLs for each file.

- S3 returns the presigned URLs to the API route.

- The API route sends the presigned URLs to the client.

- The client uploads the files directly to S3 using the presigned URLs and PUT requests.

- The client sends the file info to the API route to save the file info.

- The API route saves the file info to the database.
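The browser uses each presigned URL some time after the server creates it, so it can help to know when a URL stops working. Presigned URLs signed with AWS Signature Version 4, which is how the Minio client generates them, carry the signing time in `X-Amz-Date` and the lifetime in seconds in `X-Amz-Expires`. The sketch below reads those two query parameters. `presignedUrlExpiresAt` and `isPresignedUrlValid` are hypothetical helpers; they only inspect the URL and do not verify the signature.

```typescript
// Hypothetical helper: when does a SigV4 presigned URL expire?
export function presignedUrlExpiresAt(presignedUrl: string): Date | null {
  const params = new URL(presignedUrl).searchParams
  const amzDate = params.get('X-Amz-Date') // signing time, e.g. 20240101T120000Z
  const expires = params.get('X-Amz-Expires') // lifetime in seconds
  if (!amzDate || !expires) return null

  const match = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate)
  if (!match) return null
  const [, year, month, day, hour, minute, second] = match
  const signedAt = Date.UTC(
    Number(year), Number(month) - 1, Number(day), Number(hour), Number(minute), Number(second),
  )
  return new Date(signedAt + Number(expires) * 1000)
}

// True while the URL is still inside its validity window
export function isPresignedUrlValid(presignedUrl: string, now: Date = new Date()): boolean {
  const expiresAt = presignedUrlExpiresAt(presignedUrl)
  return expiresAt !== null && now < expiresAt
}
```

With the one-hour expiry used in this article, a client could check a URL before starting a long upload and request a new one if it has expired.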

Frontend - Upload form logic for presigned URLs

1. Create a function that requests presigned URLs from the Next.js API route

```typescript
import type { PresignedUrlProp, ShortFileProp } from '~/utils/types'

/**
 * Gets presigned urls for uploading files to S3
 * @param files name and size of each file to upload
 * @returns presigned urls, one per file
 */
export const getPresignedUrls = async (files: ShortFileProp[]) => {
  const response = await fetch('/api/files/upload/presignedUrl', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
    },
    body: JSON.stringify(files),
  })
  return (await response.json()) as PresignedUrlProp[]
}
```

2. Create a function to upload files to S3 with a PUT request to the presigned URL

The function uploadToS3 sends a PUT request to the presigned URL to upload the file to S3.

```typescript
/**
 * Uploads file to S3 directly using presigned url
 * @param presignedUrl presigned url for uploading
 * @param file file to upload
 * @returns response from S3
 */
export const uploadToS3 = async (presignedUrl: PresignedUrlProp, file: File) => {
  const response = await fetch(presignedUrl.url, {
    method: 'PUT',
    body: file,
    headers: {
      'Content-Type': file.type,
      // Access-Control-Allow-Origin is a response header sent by the storage server,
      // so it is not set here; the bucket must allow cross-origin PUT requests instead
    },
  })
  return response
}
```

3. Create a function to save file info in the database

The function saveFileInfoInDB sends a POST request to the API route to save the file info in the database.

```typescript
/**
 * Saves file info in DB
 * @param presignedUrls presigned urls used for uploading, with file names and sizes
 * @returns response from the API route
 */
export const saveFileInfoInDB = async (presignedUrls: PresignedUrlProp[]) => {
  return await fetch('/api/files/upload/saveFileInfo', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json',
    },
    body: JSON.stringify(presignedUrls),
  })
}
```

4. Create a function that uploads files to S3 and saves their info in the database

```typescript
/**
 * Uploads files to S3 and saves file info in DB
 * @param files files to upload
 * @param presignedUrls presigned urls for uploading
 * @param onUploadSuccess callback to execute after successful upload
 */
export const handleUpload = async (files: File[], presignedUrls: PresignedUrlProp[], onUploadSuccess: () => void) => {
  const uploadToS3Response = await Promise.all(
    presignedUrls.map((presignedUrl) => {
      // match each presigned url to its file by name and size
      const file = files.find(
        (file) => file.name === presignedUrl.originalFileName && file.size === presignedUrl.fileSize
      )
      if (!file) {
        throw new Error('File not found')
      }
      return uploadToS3(presignedUrl, file)
    })
  )

  if (uploadToS3Response.some((res) => res.status !== 200)) {
    alert('Upload failed')
    return
  }

  await saveFileInfoInDB(presignedUrls)
  onUploadSuccess()
}
```

5. Create a form that uploads files with presigned URLs using the functions from the previous steps

Here is a simplified version of the file, without validation and error handling.

```tsx
import { useState, useRef } from 'react'
import { MAX_FILE_SIZE_S3_ENDPOINT, handleUpload, getPresignedUrls } from '~/utils/fileUploadHelpers'
import { UploadFilesFormUI } from './UploadFilesFormUI'
import { type ShortFileProp } from '~/utils/types'

type UploadFilesFormProps = {
  onUploadSuccess: () => void
}

export function UploadFilesS3PresignedUrl({ onUploadSuccess }: UploadFilesFormProps) {
  const fileInputRef = useRef<HTMLInputElement | null>(null)
  const [isLoading, setIsLoading] = useState(false)

  const uploadToServer = async (event: React.FormEvent<HTMLFormElement>) => {
    event.preventDefault()
    // get File[] from FileList; nothing to do if no files were selected
    const fileList = fileInputRef.current?.files
    if (!fileList?.length) return
    const files = Array.from(fileList)

    setIsLoading(true)
    try {
      // send only the name and size of each file to the API route
      const filesInfo: ShortFileProp[] = files.map((file) => ({
        originalFileName: file.name,
        fileSize: file.size,
      }))
      const presignedUrls = await getPresignedUrls(filesInfo)
      // upload files to s3 endpoint directly and save file info to db
      await handleUpload(files, presignedUrls, onUploadSuccess)
    } finally {
      setIsLoading(false)
    }
  }

  return (
    <UploadFilesFormUI
      isLoading={isLoading}
      fileInputRef={fileInputRef}
      uploadToServer={uploadToServer}
      maxFileSize={MAX_FILE_SIZE_S3_ENDPOINT}
    />
  )
}
```

Backend - Upload files using presigned URLs

1. Create a helper function to generate presigned URLs for uploading files to S3

```typescript
/**
 * Generate a presigned url for uploading a file to S3
 * @param bucketName name of the bucket
 * @param fileName name of the file in the bucket
 * @param expiry lifetime of the url in seconds (default: 1 hour)
 * @returns promise with the presigned url
 */
export async function createPresignedUrlToUpload({
  bucketName,
  fileName,
  expiry = 60 * 60, // 1 hour
}: {
  bucketName: string
  fileName: string
  expiry?: number
}) {
  // Create bucket if it doesn't exist
  await createBucketIfNotExists(bucketName)
  return await s3Client.presignedPutObject(bucketName, fileName, expiry)
}
```

2. Create an API route to send requests to S3 to generate presigned URLs for each file

In this approach, we do not use formidable to parse the incoming request, so we do not need to disable the built-in body parser of Next.js. We can use the default body parser.
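The default body parser turns the JSON body into a plain object, and the route simply casts `req.body` to `ShortFileProp[]`. A runtime check keeps malformed requests from producing presigned URLs. `isShortFileArray` below is a hypothetical type guard that is not in the article's code. The T3 stack also ships zod, which could express the same check as a schema.

```typescript
type ShortFileProp = { originalFileName: string; fileSize: number }

// Hypothetical runtime check for the body of POST /api/files/upload/presignedUrl
export function isShortFileArray(body: unknown): body is ShortFileProp[] {
  if (!Array.isArray(body)) return false
  return body.every((item: unknown) => {
    if (typeof item !== 'object' || item === null) return false
    const { originalFileName, fileSize } = item as Record<string, unknown>
    return (
      typeof originalFileName === 'string' &&
      originalFileName.length > 0 &&
      typeof fileSize === 'number' &&
      Number.isFinite(fileSize) &&
      fileSize >= 0
    )
  })
}

// Usage in the handler (sketch):
// if (!isShortFileArray(req.body) || req.body.length === 0) {
//   return res.status(400).json({ message: 'No files to upload' })
// }
```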

The algorithm for generating presigned URLs for uploading files to S3:

- Get the files info from the request body.

- Check if there are files to upload.

- Create an empty array to store the presigned URLs.

For each file:

- Generate a unique file name using the nanoid library.

- Get the presigned URL using the createPresignedUrlToUpload function.

- Add the presigned URL to the array.

Then return the array of presigned URLs in the response to the client.

```typescript
import type { NextApiRequest, NextApiResponse } from 'next'
import type { ShortFileProp, PresignedUrlProp } from '~/utils/types'
import { createPresignedUrlToUpload } from '~/utils/s3-file-management'
import { env } from '~/env'
import { nanoid } from 'nanoid'

const bucketName = env.S3_BUCKET_NAME
const expiry = 60 * 60 // 1 hour, in seconds

export default async function handler(req: NextApiRequest, res: NextApiResponse) {
  if (req.method !== 'POST') {
    res.status(405).json({ message: 'Only POST requests are allowed' })
    return
  }

  // get the files from the request body
  const files = req.body as ShortFileProp[]
  if (!files?.length) {
    res.status(400).json({ message: 'No files to upload' })
    return
  }

  const presignedUrls = [] as PresignedUrlProp[]

  // use Promise.all to get all the presigned urls in parallel
  await Promise.all(
    // loop through the files
    files.map(async (file) => {
      const fileName = `${nanoid(5)}-${file?.originalFileName}`
      // get presigned url using the Minio client
      const url = await createPresignedUrlToUpload({
        bucketName,
        fileName,
        expiry,
      })
      // add presigned url to the list; the order may differ from the request,
      // which is fine because the client matches files by name and size
      presignedUrls.push({
        fileNameInBucket: fileName,
        originalFileName: file.originalFileName,
        fileSize: file.fileSize,
        url,
      })
    })
  )

  res.status(200).json(presignedUrls)
}
```

3. Create an API route to save file info in the database with Prisma

The algorithm for saving file info in the database:

- Get the file info from the request body.

- Save the file info to the database using the Prisma file.createMany method.

- Return the status and message in the response to the client.

import type { NextApiRequest, NextApiResponse } from 'next'

import { env } from '~/env'

import { db } from '~/server/db'

import type { PresignedUrlProp, FileInDBProp } from '~/utils/types'

export default async function handler(req: NextApiRequest, res: NextApiResponse) {

if (req.method !== 'POST') {

res.status(405).json({ message: 'Only POST requests are allowed' })

return

}

const presignedUrls = req.body as PresignedUrlProp[]

// Get the file name in bucket from the database

const saveFilesInfo = await db.file.createMany({

data: presignedUrls.map((file: FileInDBProp) => ({

bucket: env.S3_BUCKET_NAME,

fileName: file.fileNameInBucket,

originalName: file.originalFileName,

size: file.fileSize,

})),

})

if (saveFilesInfo) {

res.status(200).json({ message: 'Files saved successfully' })

} else {

res.status(404).json({ message: 'Files not found' })

}

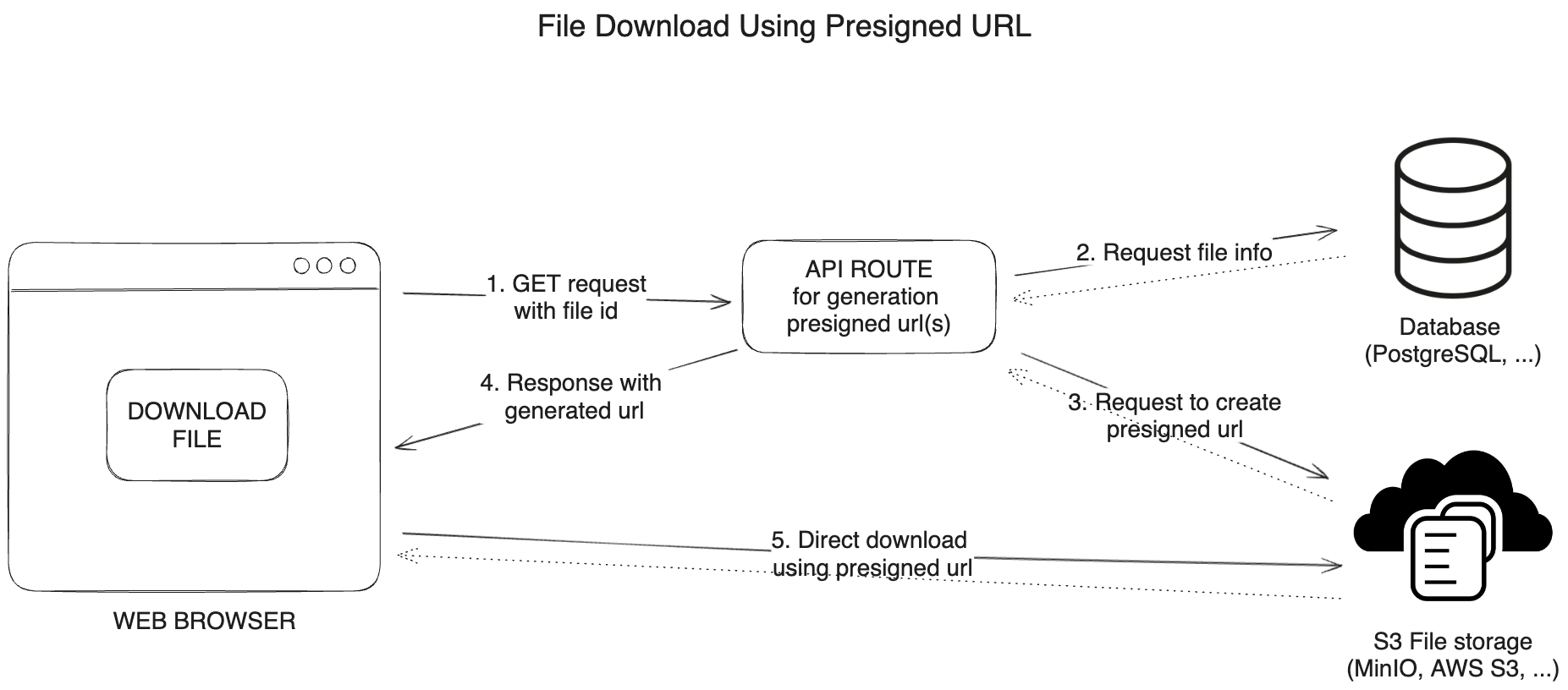

}2.2 Download files using presigned URLs

To download files:

- The client sends a GET request with the file id to the API route.

- The API route looks up the file name in the database.

- The API route generates a presigned URL for the file with the Minio client.

- The API route sends the presigned URL to the client.

- The client downloads the file directly from S3 using the presigned URL.

Frontend - Download files using presigned URLs

To download files, we will create a function downloadFile inside the FileItem component. The function sends a GET request to the API route to get a presigned URL for the file, then opens that URL in a new tab, so the browser downloads the file directly from S3 rather than through the API route.

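```typescript
async function getPresignedUrl(file: FileProps) {
  const response = await fetch(`/api/files/download/presignedUrl/${file.id}`)
  // fetch() only rejects on network errors, so check the HTTP status explicitly
  if (!response.ok) {
    throw new Error(`Failed to get download URL (status ${response.status})`)
  }
  return (await response.json()) as string
}

const downloadFile = async (file: FileProps) => {
  const presignedUrl = await getPresignedUrl(file)
  // the browser downloads the file directly from S3 using the presigned URL
  window.open(presignedUrl, '_blank')
}
```

Backend - Download files using presigned URLs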

1. Create a helper function to generate presigned URLs for downloading files from S3

To download files from S3, we will create a utility function createPresignedUrlToDownload that uses the presignedGetObject method of the Minio client to generate a presigned URL for the file.
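Object keys carry a nanoid prefix, so a browser opening the download URL will save the file under that prefixed key. The Minio client's presignedGetObject also accepts an optional fourth argument with response-header overrides such as response-content-disposition, which S3 then returns when serving the object. A minimal sketch of building that header value from the stored originalName, assuming the download route also selects originalName; attachmentDisposition is a hypothetical helper name:

```typescript
// Hypothetical helper: build an RFC 6266 Content-Disposition value with
// a quoted ASCII fallback plus an RFC 5987 UTF-8 encoded filename*.
function attachmentDisposition(originalName: string): string {
  // ASCII fallback: replace non-printable/non-ASCII chars, quotes and backslashes with '_'
  const fallback = originalName.replace(/[^\x20-\x7e]|["\\]/g, '_')
  // encodeURIComponent leaves ' ( ) * unescaped, but RFC 5987 does not allow them
  const encoded = encodeURIComponent(originalName).replace(
    /['()*]/g,
    (c) => '%' + c.charCodeAt(0).toString(16).toUpperCase(),
  )
  return `attachment; filename="${fallback}"; filename*=UTF-8''${encoded}`
}

// Usage in the download route (sketch; assumes originalName is selected too):
// const url = await s3Client.presignedGetObject(bucketName, fileObject.fileName, 60 * 60, {
//   'response-content-disposition': attachmentDisposition(fileObject.originalName),
// })
```

The quoted fallback covers clients that ignore filename*, while filename* preserves non-ASCII names for clients that support it.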

```typescript
export async function createPresignedUrlToDownload({
  bucketName,
  fileName,
  expiry = 60 * 60, // 1 hour (in seconds)
}: {
  bucketName: string
  fileName: string
  expiry?: number
}) {
  return await s3Client.presignedGetObject(bucketName, fileName, expiry)
}
```

2. Create an API route to generate a presigned URL for downloading a file

The algorithm for generating a presigned URL for downloading a file from S3:

- Get the file name from the database using the file id.

- Get the presigned URL using the createPresignedUrlToDownload function.

- Return the presigned URL in the response to the client.

import type { NextApiRequest, NextApiResponse } from 'next'

import { createPresignedUrlToDownload } from '~/utils/s3-file-management'

import { db } from '~/server/db'

import { env } from '~/env'

/**

* This route is used to get presigned url for downloading file from S3

*/

export default async function handler(req: NextApiRequest, res: NextApiResponse) {

if (req.method !== 'GET') {

res.status(405).json({ message: 'Only GET requests are allowed' })

}

const { id } = req.query

if (!id || typeof id !== 'string') {

return res.status(400).json({ message: 'Missing or invalid id' })

}

// Get the file name in bucket from the database

const fileObject = await db.file.findUnique({

where: {

id,

},

select: {

fileName: true,

},

})

if (!fileObject) {

return res.status(404).json({ message: 'Item not found' })

}

// Get presigned url from s3 storage

const presignedUrl = await createPresignedUrlToDownload({

bucketName: env.S3_BUCKET_NAME,

fileName: fileObject?.fileName,

})

res.status(200).json(presignedUrl)

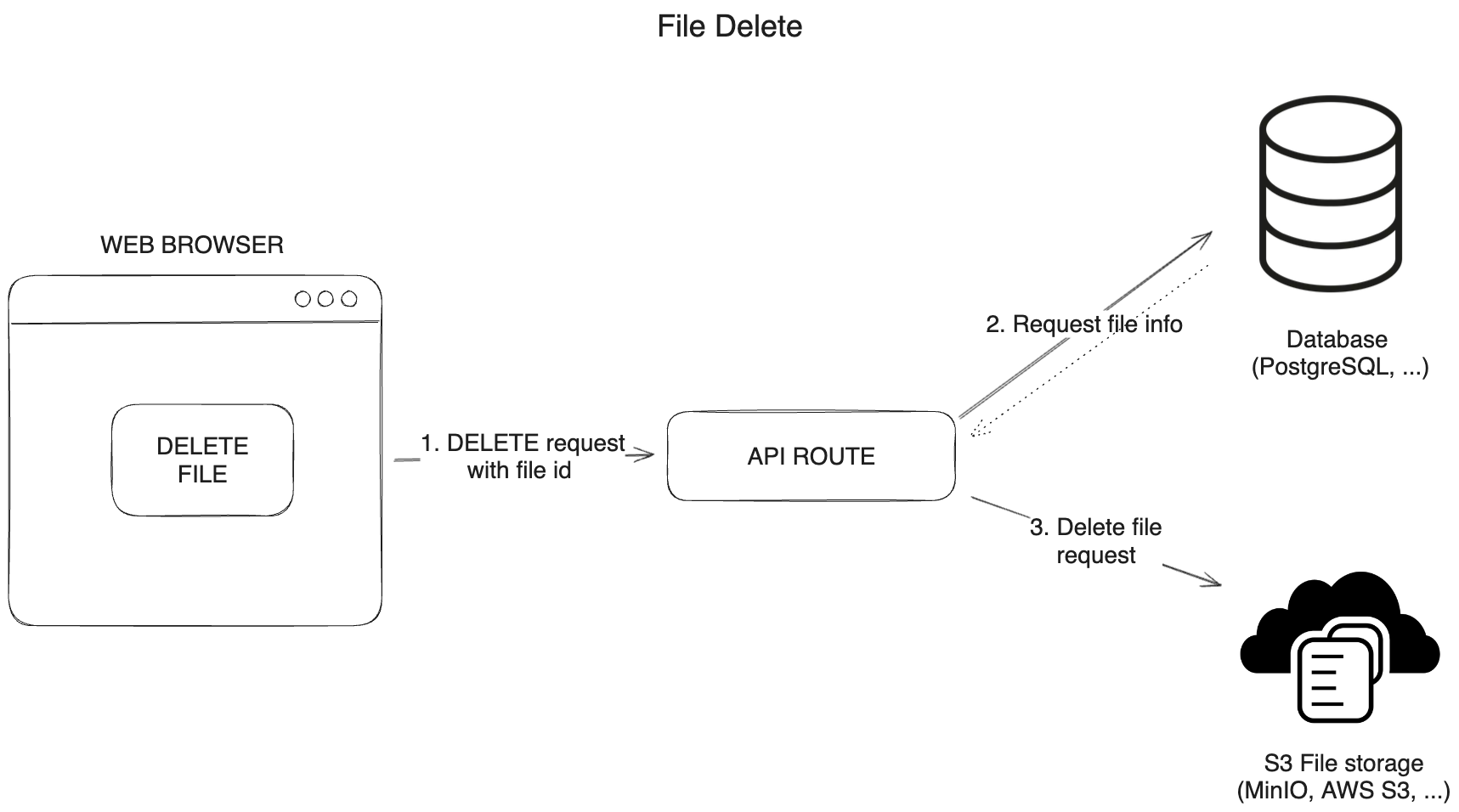

}3. Delete files from S3

Frontend - Delete files from S3

Files are deleted with a DELETE request to the API route. I created a deleteFile function in the FileItem component that sends this request; the API route then deletes the file from both the S3 bucket and the database.

The algorithm for deleting files from S3:

- Mark the file as being deleted (isDeleting: true) in the client state right away, so the UI can reflect it.

- Send a DELETE request to the API route to delete the file from the S3 bucket and the database.

- Fetch the files after deleting, then clear the isDeleting flag.
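In the deleteFile function, the optimistic step does not remove the item; it sets an isDeleting flag on it with an immutable update, and the finally block clears the flag the same way. A minimal sketch of that update as a pure helper, assuming a reduced FileProps shape (the real type likely has more fields); withDeletingFlag is a hypothetical name:

```typescript
// Assumed minimal shape of a file item in the client state.
type FileProps = {
  id: string
  originalFileName: string
  isDeleting?: boolean
}

// Hypothetical helper: return a new array in which only the file with `id`
// has isDeleting set to `flag`. Other items keep their object identity,
// which helps if list items are memoized.
function withDeletingFlag(files: FileProps[], id: string, flag: boolean): FileProps[] {
  return files.map((file) => (file.id === id ? { ...file, isDeleting: flag } : file))
}

// Usage with React state (sketch):
// setFiles((files) => withDeletingFlag(files, id, true))   // before the request
// setFiles((files) => withDeletingFlag(files, id, false))  // in finally
```

Keeping the update pure makes it easy to unit test and avoids duplicating the same map expression in two places.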

Here is an example of the delete function in the FileItem component:

```typescript
async function deleteFile(id: string) {
  // mark the file as being deleted so the UI can reflect it immediately
  setFiles((files: FileProps[]) =>
    files.map((file: FileProps) => (file.id === id ? { ...file, isDeleting: true } : file))
  )

  try {
    // delete file request to the server
    const response = await fetch(`/api/files/delete/${id}`, {
      method: 'DELETE',
    })

    // fetch() only rejects on network errors, so check the HTTP status explicitly
    if (!response.ok) {
      throw new Error(`Delete failed with status ${response.status}`)
    }

    // fetch files after deleting
    await fetchFiles()
  } catch (error) {
    console.error(error)
    alert('Failed to delete file')
  } finally {
    // remove isDeleting flag from the file
    setFiles((files: FileProps[]) =>
      files.map((file: FileProps) => (file.id === id ? { ...file, isDeleting: false } : file))
    )
  }
}
```

Backend - Delete files from S3

1. Create a utility function to delete files from S3

To delete files from S3, we will create a utility function deleteFileFromBucket that uses the removeObject method of the Minio client to delete the file from the S3 bucket.

```typescript
/**
 * Delete file from S3 bucket
 * @param bucketName name of the bucket
 * @param fileName name of the file
 * @returns true if file was deleted, false if not
 */
export async function deleteFileFromBucket({ bucketName, fileName }: { bucketName: string; fileName: string }) {
  try {
    await s3Client.removeObject(bucketName, fileName)
  } catch (error) {
    console.error(error)
    return false
  }
  return true
}
```

2. Create an API route to delete files from S3

Here is an example of an API route to delete files from S3. Create a file [id].ts in the pages/api/files/delete folder (pages/api/files/delete/[id].ts). This route deletes the file from both the S3 bucket and the database.

The algorithm for deleting files on the server:

- Get the file name in the bucket from the database using the file id.

- Check that the file exists in the database.

- Delete the file from the S3 bucket using the deleteFileFromBucket function.

- Delete the file from the database using the Prisma file.delete method.

- Return the status and message in the response to the client.

import type { NextApiRequest, NextApiResponse } from 'next'

import { deleteFileFromBucket } from '~/utils/s3-file-management'

import { db } from '~/server/db'

import { env } from '~/env'

export default async function handler(req: NextApiRequest, res: NextApiResponse) {

if (req.method !== 'DELETE') {

res.status(405).json({ message: 'Only DELETE requests are allowed' })

}

const { id } = req.query

if (!id || typeof id !== 'string') {

return res.status(400).json({ message: 'Missing or invalid id' })

}

// Get the file name in bucket from the database

const fileObject = await db.file.findUnique({

where: {

id,

},

select: {

fileName: true,

},

})

if (!fileObject) {

return res.status(404).json({ message: 'Item not found' })

}

// Delete the file from the bucket

await deleteFileFromBucket({

bucketName: env.S3_BUCKET_NAME,

fileName: fileObject?.fileName,

})

// Delete the file from the database

const deletedItem = await db.file.delete({

where: {

id,

},

})

if (deletedItem) {

res.status(200).json({ message: 'Item deleted successfully' })

} else {

res.status(404).json({ message: 'Item not found' })

}

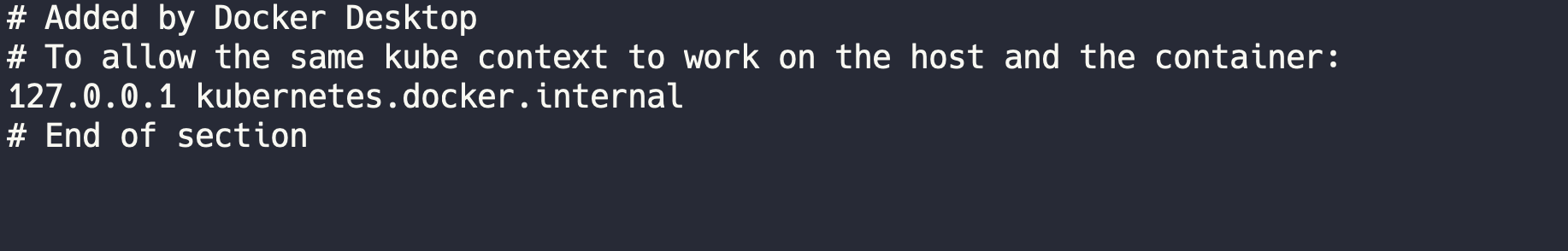

}Deploy locally using Docker Compose

To deploy the application locally, we will use Docker Compose to run the Next.js app, PostgreSQL, and MinIO S3. I explained how to set up the Docker Compose file in the previous article.

Because we use presigned URLs, the Docker Compose file needs a few changes.

The key point is that a presigned URL created inside the Docker container contains the S3 host configured in the Docker Compose file. For example, if the file sets environment: - S3_ENDPOINT=minio, all presigned URLs will look like http://minio:9000/bucket-name/file-name.

These URLs will not work in the browser unless minio is added to the hosts file. We cannot use localhost either: inside the container, localhost refers to the container itself, not to the user's machine. Rewriting the host after the URL is created does not help, because the host is part of the signed request, so changing it invalidates the signature.

The solution is to use kubernetes.docker.internal as the MinIO S3 endpoint. This is a special DNS name that resolves to the host machine from inside a Docker container. It is available on Docker Desktop for Mac and Windows.

Also make sure that kubernetes.docker.internal is in the hosts file of your machine (it should be there by default). The presigned URLs will then look like http://kubernetes.docker.internal:9000/bucket-name/file-name and will work in the browser.
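To make the host issue concrete, here is an illustrative sketch of the part of a presigned URL that comes from the client configuration. It assumes path-style URLs (the form shown above, which MinIO uses) and a hypothetical objectBaseUrl helper; the real Minio client also appends the X-Amz-* signature query parameters, which this sketch leaves out:

```typescript
// Assumed subset of the Minio client options (endPoint, port, useSSL).
type S3EndpointConfig = {
  endPoint: string // e.g. the value of S3_ENDPOINT
  port: number // e.g. Number(S3_PORT)
  useSSL: boolean
}

// Hypothetical helper: the path-style base of a presigned URL, without the signature.
// The host is copied straight from endPoint, so it must be resolvable by the browser,
// not only by the Next.js container.
function objectBaseUrl(config: S3EndpointConfig, bucket: string, key: string): string {
  const scheme = config.useSSL ? 'https' : 'http'
  // encode each path segment of the object key, keeping '/' separators
  const encodedKey = key
    .split('/')
    .map((part) => encodeURIComponent(part))
    .join('/')
  return `${scheme}://${config.endPoint}:${config.port}/${bucket}/${encodedKey}`
}
```

With endPoint set to minio, the browser receives a host it cannot resolve; with kubernetes.docker.internal, the same URL shape points at the host machine, where port 9000 is published by Docker Compose.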

Here is the full Docker Compose file:

```yaml
version: '3.9'
name: nextjs-postgres-s3minio

services:
  web:
    container_name: nextjs
    build:
      context: ../
      dockerfile: compose/web.Dockerfile
      args:
        NEXT_PUBLIC_CLIENTVAR: 'clientvar'
    ports:
      - 3000:3000
    volumes:
      - ../:/app
    environment:
      - DATABASE_URL=postgresql://postgres:postgres@db:5432/myapp-db?schema=public
      - S3_ENDPOINT=kubernetes.docker.internal
      - S3_PORT=9000
      - S3_ACCESS_KEY=minio
      - S3_SECRET_KEY=miniosecret
      - S3_BUCKET_NAME=s3bucket
    depends_on:
      - db
      - minio
    # Optional, if you want to apply db schema from prisma to postgres
    command: sh ./compose/db-push-and-start.sh

  db:
    image: postgres:15.3
    container_name: postgres
    ports:
      - 5432:5432
    environment:
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: postgres
      POSTGRES_DB: myapp-db
    volumes:
      - postgres-data:/var/lib/postgresql/data
    restart: unless-stopped

  minio:
    container_name: s3minio
    image: bitnami/minio:latest
    ports:
      - '9000:9000'
      - '9001:9001'
    volumes:
      - minio_storage:/data

volumes:
  postgres-data:
  minio_storage:
```

To run the application, use the following command:

```bash
docker-compose -f compose/docker-compose.yml --env-file .env up
```

After you run the application, you can access it at http://localhost:3000.

The MinIO S3 API will be available at http://localhost:9000, and the MinIO Console at http://localhost:9001, where you can log in with the access key minio and the secret key miniosecret.

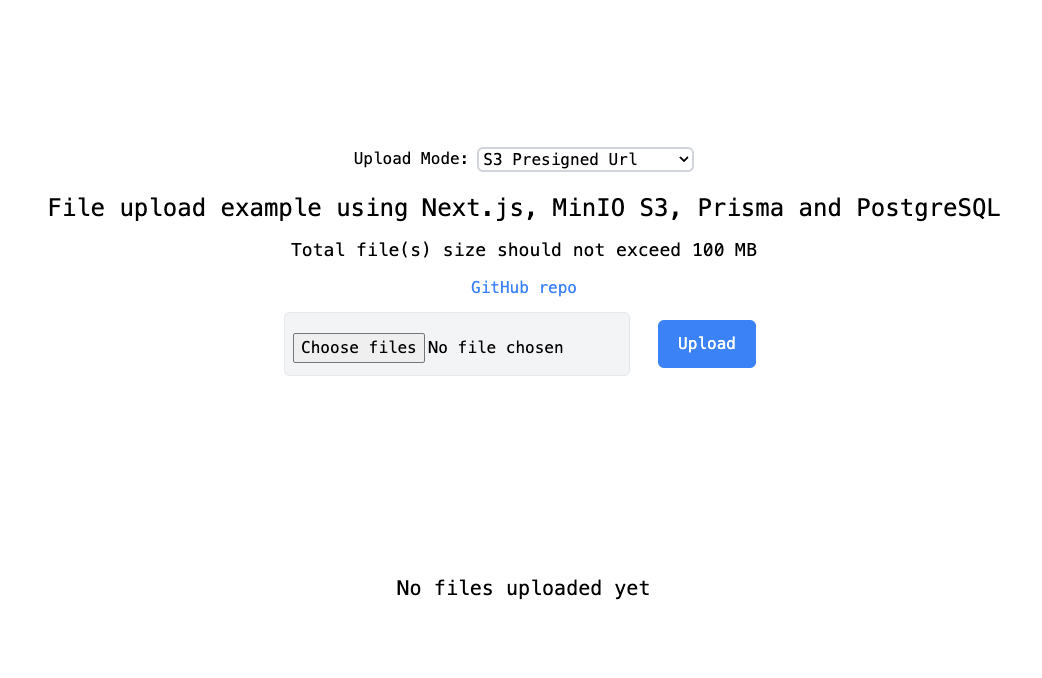

You will see something like this:

The next step would be to deploy the application to a cloud provider. I will cover this in the next article.

Conclusion

In this article, we learned how to upload and download files using Next.js, PostgreSQL, and Minio S3. We also learned how to use presigned URLs so that files are uploaded from the client directly to S3 and downloaded directly from S3, without passing through the Next.js server. We built an application that uploads, downloads, and deletes files in S3 using presigned URLs, and we deployed it locally with Docker Compose.

I hope you found this article helpful. If you have any questions or suggestions, feel free to leave a comment.